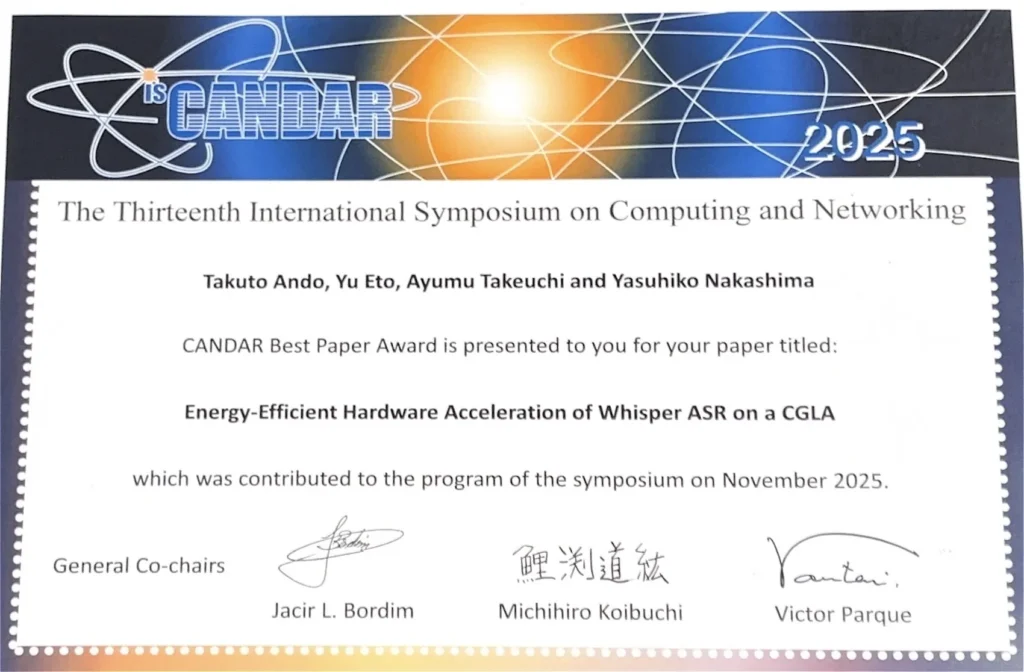

The NAIST Computing Architecture Lab's YouTube channel has published a new video featuring a lecture by LENZO's Co-Founder & Chief Architect, Prof. Yasuhiko Nakashima.

In this talk, Prof. Nakashima shares perspectives on training environments for semiconductor engineers and broader directions for future accelerator technologies, including AI and cryptography.

A central theme of the lecture is the idea that “Architecture Leads System Design,” highlighting how architectural choices shape system-level performance, efficiency, and long-term adaptability. The video is published as a knowledge-sharing resource and is available to watch in full below.